Applied Statistical Computing (R)

The use of computational techniques to inform business decisions, commonly known as business analytics, began in the late 1960s with the rise of rudimentary computers that enabled their users to process information faster and more reliably. In particular, these platforms lowered the cost of storing and retrieving information through early databases. They also made it possible to rerun hypothetical scenarios many times, simulating numerous possibilities to obtain a quantitative basis for projecting worst-case and best-case outcomes.

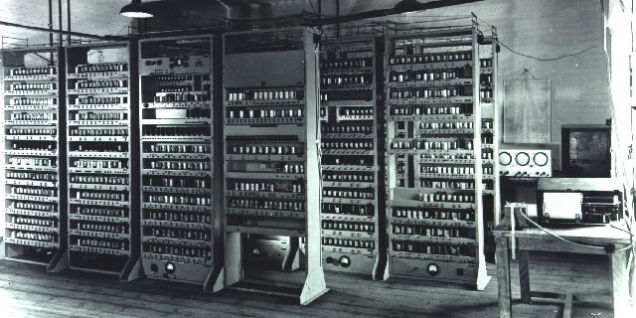

An early example from 1960

In the 1960s only governments and large enterprises could afford computers; nonetheless, the cost was considered justified by the processing output obtained per unit of information. The technology was also considered scalable from its early days, offering expandable features that allowed capacity to grow quickly, which further justified the cost.

Modern financial and business decisions inevitably depend on hundreds of moving components measured in fast-changing settings. It is therefore essential to equip businesses with appropriate computational tools, notably software routines (in the context of this course, software code), to acquire, process and output information efficiently.

R Foundation

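R was created by the statisticians Ross Ihaka and Robert Gentleman at the University of Auckland, with the first versions appearing in 1993. The R Foundation for Statistical Computing was established later to support the project and its continued development. The name of the software derives in part from the shared first initial of its two creators.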

Each software package, e.g. R, Python, Matlab, Stata or SAS, shares certain similarities with the alternatives while also offering unique features. The choice of an appropriate platform is often context-dependent and is determined by factors such as the cost of the software, the available resources, the processing requirements and speed, and how well the software integrates into the existing business environment.

R is free, open-source software and is widely regarded as one of the leading packages in the academic and professional statistics communities. Every package strikes a balance between two things: the flexibility it gives users to design bespoke routines from basic techniques and resources, and the pre-defined tools it provides (often at the cost of flexibility) so that results can be obtained without re-developing the intermediate steps. For instance, SPSS, an alternative package often used in similar contexts, offers tools that produce results in fewer steps but limits users to a particular set of built-in options, which may suit only certain scenarios.

Spreadsheet platforms are used across many business settings because they offer a quick means to input, process and output information. While these platforms are considered suitable for examining small datasets, e.g. under 5 GB, processing larger datasets becomes exceedingly slow and is constrained by the limited set of statistical programming functions available. Higher-level software packages such as R provide a suitable environment to load and preserve datasets efficiently, develop the software code, apply the intended computations and store the outcomes in separate locations. This standardised working routine and use of computer resources enhances the efficiency, flexibility and reliability of the overall data handling process, and allows results to be obtained with minimal resources (both human input and hardware).
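The load, compute and store routine described above can be sketched in a few lines of base R. This is only an illustration under stated assumptions: the `sales` data, its `region` and `revenue` columns and the temporary file names are hypothetical and are not part of the course material.

```r
# Hypothetical sales records; in practice these would already sit in a file.
sales <- data.frame(
  region  = c("North", "South", "North", "East", "South", "East"),
  revenue = c(120, 95, 130, 80, 105, 90)
)

# Save the raw data to a temporary CSV file so that the loading step can be shown.
input_file <- tempfile(fileext = ".csv")
write.csv(sales, input_file, row.names = FALSE)

# 1. Load the dataset into the R session.
loaded <- read.csv(input_file)

# 2. Apply the intended computation: mean revenue per region.
summary_by_region <- aggregate(revenue ~ region, data = loaded, FUN = mean)

# 3. Store the outcome in a separate file, leaving the raw data untouched.
output_file <- tempfile(fileext = ".csv")
write.csv(summary_by_region, output_file, row.names = FALSE)

print(summary_by_region)
```

Keeping the raw input, the code and the stored result separate is what makes the routine repeatable: the same script can be rerun unchanged whenever the input file is updated.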

Statistics from the past decade indicate that 18% of businesses relying primarily on spreadsheets have suffered data losses, while 42% and 49% have reported analytics-based underperformance and excessively costly information processing, respectively, as a result of using spreadsheets to process large datasets. These undesirable outcomes translate into financial losses through inefficient decision making, loss of clients or higher operating costs.

TIOBE Software Popularity Index

The R programming language was ranked between 8th and 73rd by the TIOBE Index during 2007-2023, and currently stands in 19th position. The ranking captures overall popularity across a wide range of object-oriented, database and computational languages. Note that while all of these languages can carry out numerical and dataset computations, several of the higher-ranked ones are often considered less focused on statistical analysis. Combining the consensus within our context with the TIOBE Index places R after Python, Matlab and Fortran.

R is considered a medium-level, general-purpose language that offers both flexibility and productivity, depending on the available resources. While the software is used in many contexts, its core uses are data exploration, visualization and statistical analysis, and the existing libraries and functions have been developed largely around these areas. Learning R is much like learning any language: it requires memorising the vocabulary, understanding the grammatical mechanics and learning by practice.
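As a first taste of that vocabulary and grammar, the three core uses mentioned above can be sketched with the `mtcars` dataset that ships with R. The choice of dataset, the variables `mpg` and `wt`, and the temporary PDF file are assumptions made purely for illustration.

```r
# Load a dataset that is bundled with every R installation.
data(mtcars)

# Exploration: inspect the structure and summarise one variable.
str(mtcars)
summary(mtcars$mpg)

# Statistical analysis: simple linear regression of fuel economy on weight.
fit <- lm(mpg ~ wt, data = mtcars)
print(coef(fit))

# Visualization: scatter plot with the fitted line, written to a temporary
# PDF so that the sketch also runs in a non-interactive session.
plot_file <- tempfile(fileext = ".pdf")
pdf(plot_file)
plot(mtcars$wt, mtcars$mpg,
     xlab = "Weight (1000 lbs)", ylab = "Miles per gallon",
     main = "Fuel economy against weight")
abline(fit)
dev.off()
```

In an interactive session the `pdf()` and `dev.off()` calls can be omitted, in which case the plot appears directly in the graphics window.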